Kinect


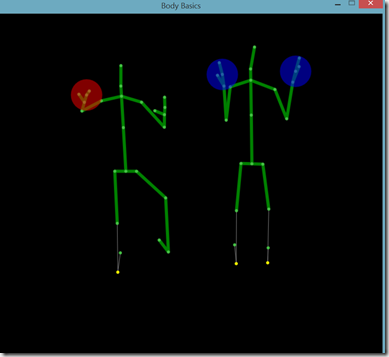


Latest revision as of 11:11, 9 May 2023

The Kinect is a motion-sensing input device. It is a webcam-style add-on peripheral that lets users control and interact with their computer through body movement, using the tracked positions of the body's joints. Microsoft provides a free Kinect SDK for recognizing gestures and spoken commands.

Kinect V2

The device features an RGB camera, depth sensor and multi-array microphone running proprietary software, which provide full-body 3D motion capture, facial recognition, voice recognition and acoustic source localization capabilities.

The depth sensor pairs an infrared light source with a monochrome CMOS sensor and measures depth by time of flight, capturing video data in 3D. The Kinect can track up to six people simultaneously. The sensors output video at 30 Hz; in low light the color camera drops to 15 Hz. The depth stream has a resolution of 512 × 424 pixels, and the RGB color camera delivers 1920 × 1080 images at up to 30 fps. The sensor has a practical range of about 0.5–4.5 m. The Kinect processes about 2 gigabytes of data per second, so it requires a USB 3.0 port. The Kinect for Windows SDK 2.0 runs only on Windows 8 or later.
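A quick calculation shows why a USB 2.0 port would not be enough. The Python sketch below estimates the uncompressed data rate of just the depth and color streams, assuming a 512 × 424 depth stream and a 1920 × 1080 color stream (the Kinect V2 resolutions), both at 30 fps. The byte sizes are assumptions not stated on this page: 16 bits per depth pixel and 2 bytes per color pixel (YUY2). The USB figures are nominal signaling rates, not achievable throughput.

```python
# Rough, uncompressed bandwidth estimate for two Kinect V2 streams.
# Assumptions (not from this page): 16-bit depth pixels and
# YUY2 color frames at 2 bytes per pixel.

USB2_MBIT_PER_S = 480    # USB 2.0 Hi-Speed nominal signaling rate
USB3_MBIT_PER_S = 5000   # USB 3.0 SuperSpeed nominal signaling rate


def stream_bytes_per_second(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Uncompressed bytes per second for one video stream."""
    return width * height * bytes_per_pixel * fps


depth = stream_bytes_per_second(512, 424, 2, 30)     # 16-bit depth at 30 fps
color = stream_bytes_per_second(1920, 1080, 2, 30)   # YUY2 color at 30 fps
total_mbit = (depth + color) * 8 / 1_000_000

print(f"depth: {depth / 1e6:.1f} MB/s")          # depth: 13.0 MB/s
print(f"color: {color / 1e6:.1f} MB/s")          # color: 124.4 MB/s
print(f"total: {total_mbit:.0f} Mbit/s")         # total: 1100 Mbit/s
print("fits USB 2.0:", total_mbit < USB2_MBIT_PER_S)   # fits USB 2.0: False
print("fits USB 3.0:", total_mbit < USB3_MBIT_PER_S)   # fits USB 3.0: True
```

Even these two streams alone need more than twice the USB 2.0 signaling rate, before counting the infrared stream or protocol overhead.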

Here is a color depth image:

[Figure: Color Depth Image]

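For each tracked body you can retrieve joint positions like those shown below, including hand-tip and thumb joints that give basic finger information.

[Figure: Joint Information Screen]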
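The Kinect V2 tracks 25 joints per body; the "finger" information comes from the hand-tip and thumb joints of each hand. The Python sketch below lists the joints in the order of the `JointType` enumeration of the Kinect for Windows SDK 2.0. Presentation's own `body_data` constants (such as `bd.HAND_RIGHT` in the script below) may use different numbering, and the helper `finger_positions` is a hypothetical illustration, not part of either API.

```python
from enum import IntEnum


class JointType(IntEnum):
    """Kinect V2 body joints, numbered as in the SDK 2.0 JointType enumeration."""
    SPINE_BASE = 0
    SPINE_MID = 1
    NECK = 2
    HEAD = 3
    SHOULDER_LEFT = 4
    ELBOW_LEFT = 5
    WRIST_LEFT = 6
    HAND_LEFT = 7
    SHOULDER_RIGHT = 8
    ELBOW_RIGHT = 9
    WRIST_RIGHT = 10
    HAND_RIGHT = 11
    HIP_LEFT = 12
    KNEE_LEFT = 13
    ANKLE_LEFT = 14
    FOOT_LEFT = 15
    HIP_RIGHT = 16
    KNEE_RIGHT = 17
    ANKLE_RIGHT = 18
    FOOT_RIGHT = 19
    SPINE_SHOULDER = 20
    HAND_TIP_LEFT = 21
    THUMB_LEFT = 22
    HAND_TIP_RIGHT = 23
    THUMB_RIGHT = 24


# The joints that carry the sensor's limited finger information.
FINGER_JOINTS = (
    JointType.HAND_TIP_LEFT,
    JointType.THUMB_LEFT,
    JointType.HAND_TIP_RIGHT,
    JointType.THUMB_RIGHT,
)


def finger_positions(positions: list[tuple[float, float, float]]) -> dict[str, tuple[float, float, float]]:
    """Pick the finger joints out of a full 25-entry list of (x, y, z) positions."""
    if len(positions) != len(JointType):
        raise ValueError(f"expected {len(JointType)} joints, got {len(positions)}")
    # IntEnum members are ints, so they index the list directly.
    return {joint.name: positions[joint] for joint in FINGER_JOINTS}
```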

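Presentation software

The following script for Neurobehavioral Systems' Presentation waits until the Kinect reports a tracked body, then continuously moves six colored markers to the right hand, left hand, head, right wrist, right hand tip and right thumb, and prints the right hand's x, y and z position on screen.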

```
begin;

# Six joint markers (one per showJoint call below).
# Entries 1-3 are resized at run time; entries 4-6 keep the size set here.
array {
   ellipse_graphic { ellipse_width = 1;  ellipse_height = 1;  color = 255, 0, 0;   };  # right hand
   ellipse_graphic { ellipse_width = 1;  ellipse_height = 1;  color = 0, 255, 0;   };  # left hand
   ellipse_graphic { ellipse_width = 1;  ellipse_height = 1;  color = 0, 0, 255;   };  # head
   ellipse_graphic { ellipse_width = 10; ellipse_height = 10; color = 255, 255, 0; };  # right wrist
   ellipse_graphic { ellipse_width = 10; ellipse_height = 10; color = 0, 255, 255; };  # right hand tip
   ellipse_graphic { ellipse_width = 10; ellipse_height = 10; color = 255, 0, 255; };  # right thumb
} arrayJoints;

picture {
   text { font = "Arial"; font_size = 20; caption = "move a bit:)"; } cap;
   x = 0; y = -400;
} pic;

begin_pcl;

array<vector> data[0];   # joint positions, in centimeters
array<int> body_ids[0];
int counter = 0;
string toScreen;

# Replace the caption text and redraw the whole picture.
sub change_caption( string caption ) begin
   cap.set_caption( caption, true );
   pic.present();
end;

# Move marker partNr to the position of the given joint.
# Picture part 1 is the caption, so the markers are parts 2..7.
sub showJoint( int joint, int partNr ) begin
   pic.set_part_x( partNr + 1, data[joint].x() * 10 );   # 1 cm -> 10 pixels
   pic.set_part_y( partNr + 1, data[joint].y() * 10 );
   if partNr <= 3 then
      # hands and head: marker size follows the joint's distance (z)
      arrayJoints[partNr].set_dimensions( data[joint].z(), data[joint].z() );
      arrayJoints[partNr].redraw();
   end;
end;

kinect k = new kinect();
depth_data dd = k.depth();
dd.RES_512x424;

body_tracker tracker = k.body();
tracker.set_seated( true );
tracker.start();

pic.present();

# Wait until the tracker reports a body.
body_data bd;
loop until !is_null( bd ) begin
   bd = tracker.get_new_body();
end;

# Add the six joint markers to the picture.
loop
   int count = 1;
until count > 6 begin
   pic.add_part( arrayJoints[count], 0, 0 );
   count = count + 1;
end;

dd.start();
loop until false begin
   if ( dd.new_data() ) then
      counter = counter + 1;
      bd.get_positions( data, body_ids );
      if data.count() > 0 then
         showJoint( bd.HAND_RIGHT, 1 );
         showJoint( bd.HAND_LEFT, 2 );
         showJoint( bd.HEAD, 3 );
         showJoint( bd.WRIST_RIGHT, 4 );
         showJoint( bd.HAND_TIP_RIGHT, 5 );
         showJoint( bd.THUMB_RIGHT, 6 );
         toScreen = "dataCount: " + string( counter ) + "\n";
         toScreen.append( "data X: " + string( data[bd.HAND_RIGHT].x() ) + "\n" );
         toScreen.append( "data Y: " + string( data[bd.HAND_RIGHT].y() ) + "\n" );
         toScreen.append( "data Z: " + string( data[bd.HAND_RIGHT].z() ) + "\n" );
         change_caption( toScreen );
      end;
   end;
end;
```
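The `showJoint` sub places each marker by multiplying the joint's x and y positions (in centimeters, per the script's comment) by 10, and sizes the hand and head markers by their z distance. The Python sketch below reproduces only that arithmetic, which can help when checking where a given joint will appear on screen. The names `Marker`, `PIXELS_PER_CM` and `joint_to_marker` are hypothetical and not part of Presentation.

```python
from typing import NamedTuple, Optional


class Marker(NamedTuple):
    x_px: float
    y_px: float
    size_px: Optional[float]  # None: the marker keeps its SDL-defined size


PIXELS_PER_CM = 10  # same scale factor that showJoint uses


def joint_to_marker(x_cm: float, y_cm: float, z_cm: float, part_nr: int) -> Marker:
    """Mirror showJoint: scale x and y to pixels; markers 1-3 get width = height = z."""
    size = z_cm if part_nr <= 3 else None
    return Marker(x_cm * PIXELS_PER_CM, y_cm * PIXELS_PER_CM, size)


# Right-hand marker (part 1) for a joint at x = 25 cm, y = -10 cm, z = 150 cm:
print(joint_to_marker(25.0, -10.0, 150.0, 1))
# Marker(x_px=250.0, y_px=-100.0, size_px=150.0)
```

Because the size is taken directly from z in centimeters, a hand 1.5 m from the sensor is drawn as a 150-pixel circle, and the hand and head markers grow as the person steps back.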